|

I am a Master's student in Computer Engineering at Sharif University of Technology, where I conduct research in the RIML Lab under the supervision of Prof. MohammadHossein Rohban, in close collaboration with Prof. Mohammad Sabokrou. Previously, I received my Bachelor of Science in Computer Engineering from Iran University of Science and Technology. During the final year of my Bachelor's, I was a research assistant at CVLab IUST under the supervision of Prof. Mohammad Reza Mohammadi. Email / Google Scholar / GitHub / LinkedIn |


I have extensive research experience in the trustworthiness and reliability of machine learning models, and a strong academic interest in developing robust machine learning algorithms, especially in computer vision and natural language processing (NLP). |


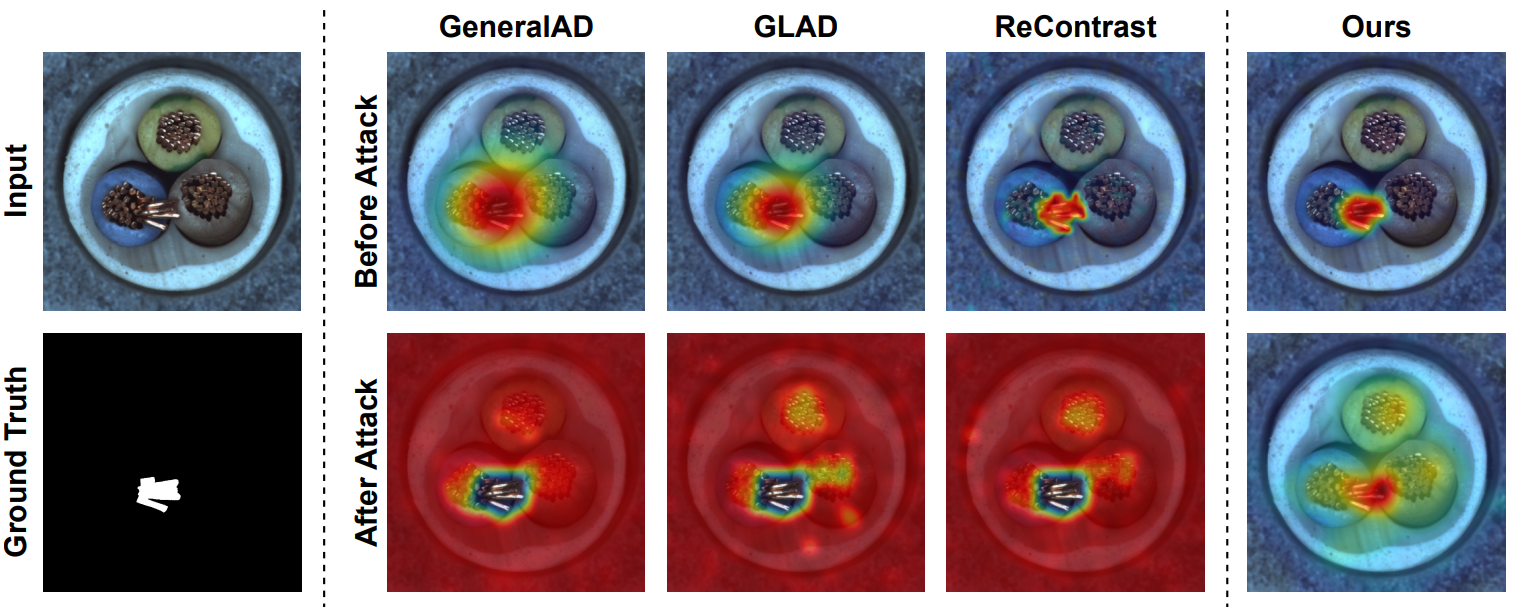

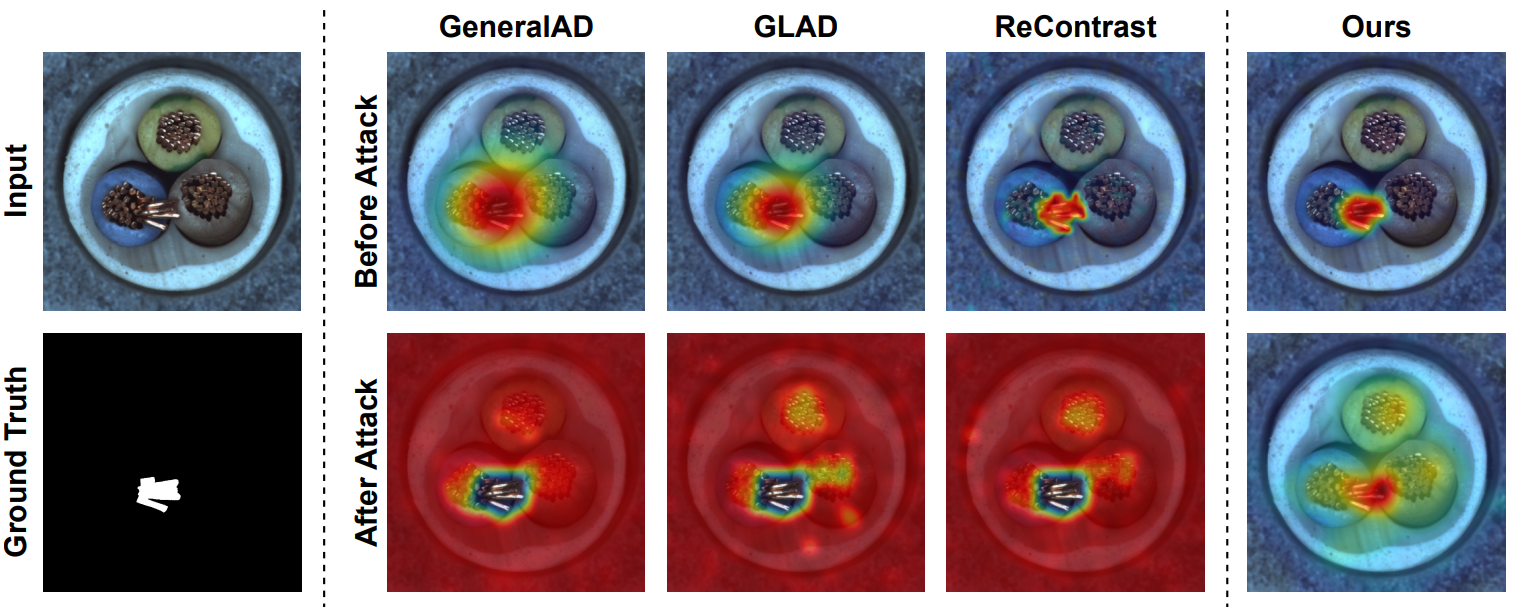

Mojtaba Nafez, Amirhossein Koochakian, Arad Maleki, MohammadHossein Rohban CVPR, 2025 Proceedings / code

PatchGuard is an adversarially robust Anomaly Detection (AD) and Localization (AL) method using pseudo anomalies in a ViT-based framework. It leverages Foreground-Aware Pseudo-Anomalies and a novel loss function for improved robustness, achieving significant performance gains on industrial and medical datasets under adversarial settings. |

|

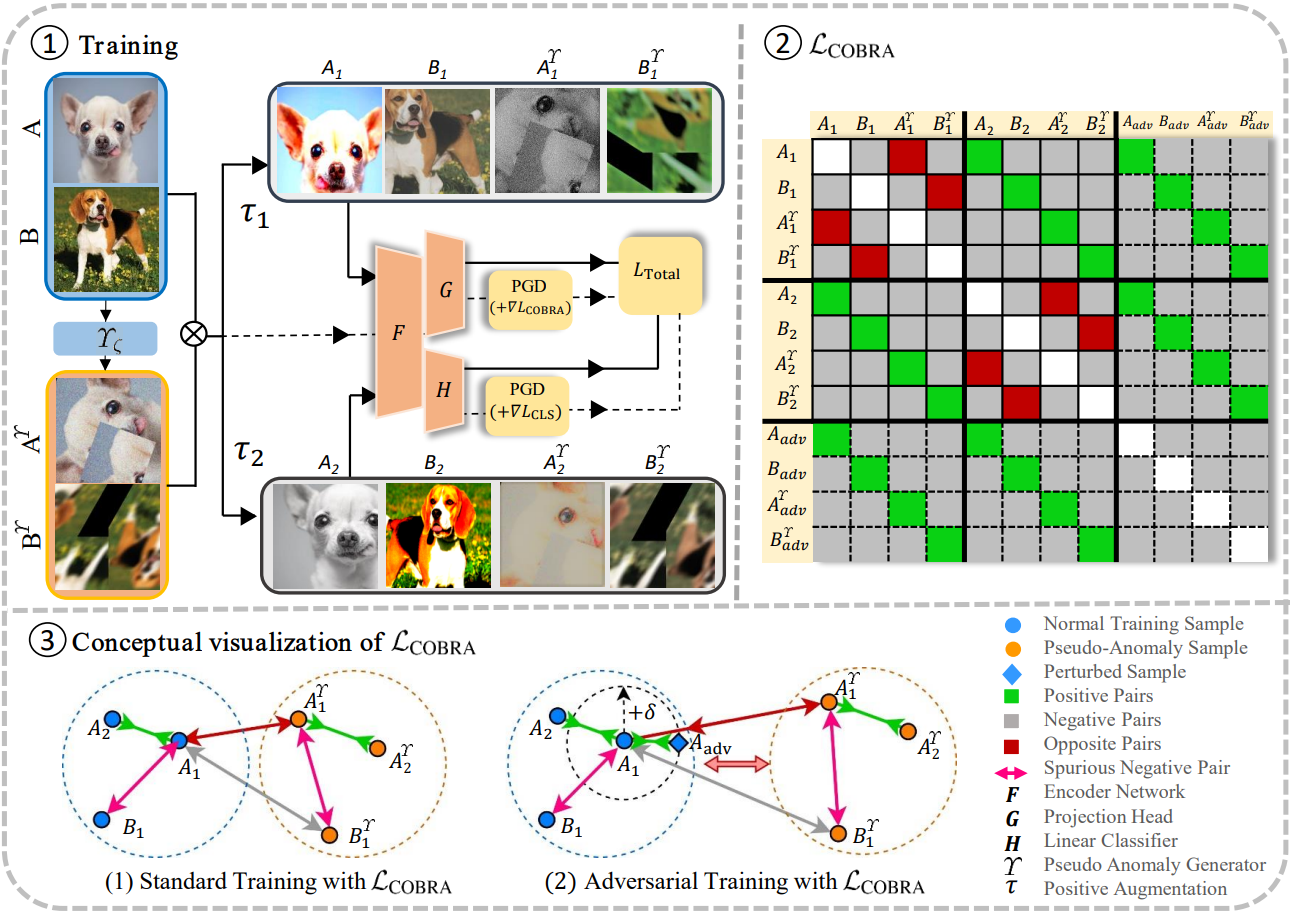

Hossein Mirzaei, Mojtaba Nafez, Jafar Habibi, Mohammad Sabokrou, MohammadHossein Rohban ICLR, 2025 openreview / code

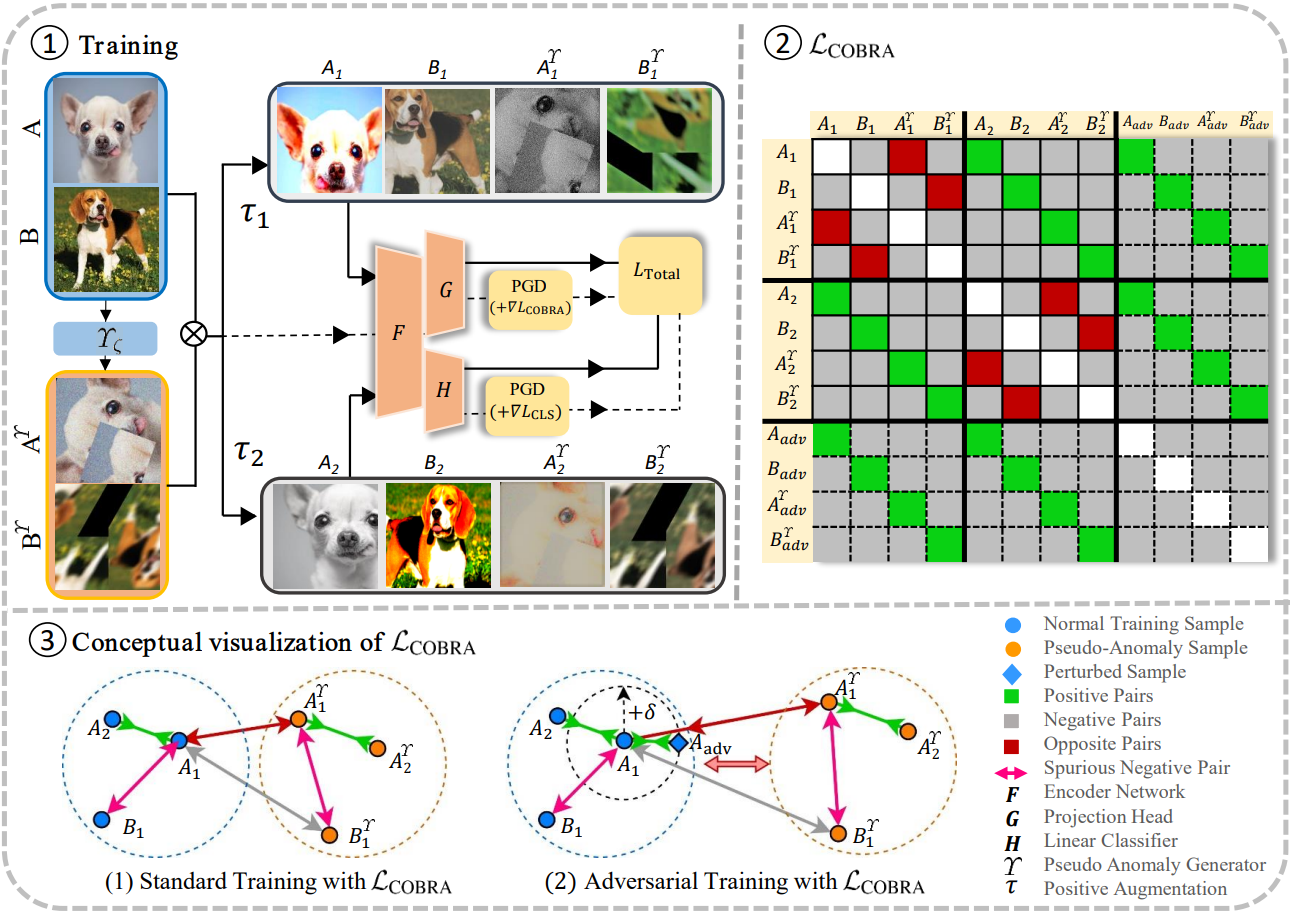

Anomaly Detection (AD) methods are vulnerable to adversarial attacks because they rely on unlabeled normal samples. This work addresses the problem by creating a pseudo-anomaly group and applying adversarial training with a contrastive loss, using opposite pairs to mitigate spurious negative pairs and improve robustness. |

|

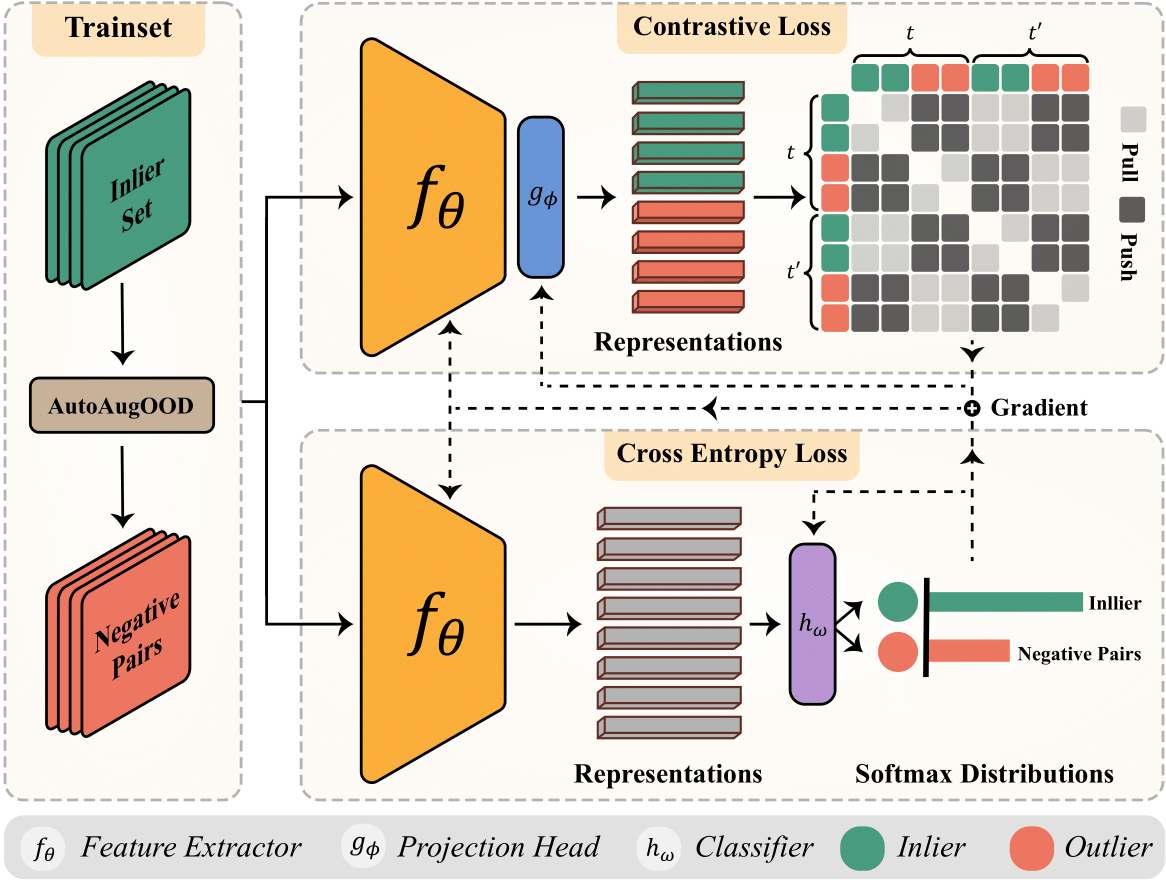

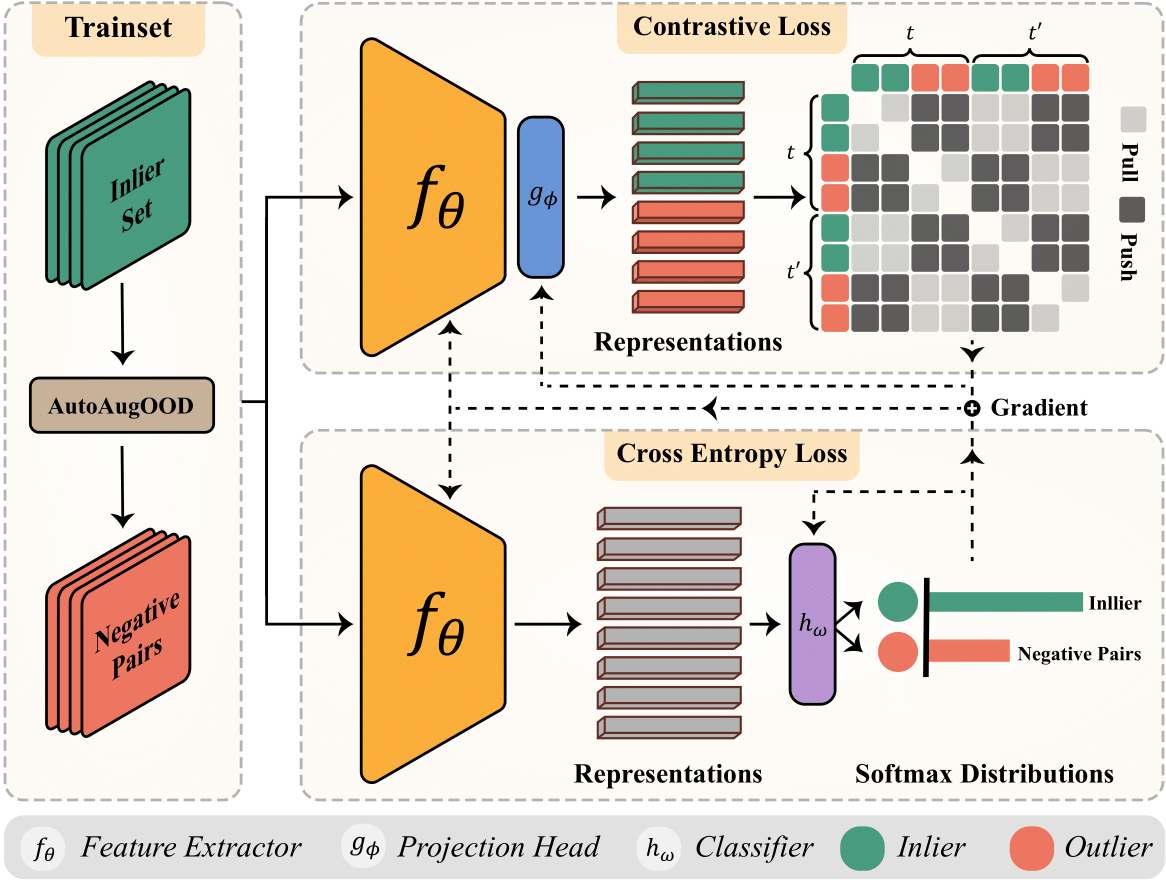

Hossein Mirzaei, Mojtaba Nafez, Mohammad Jafari, Mohammad Bagher Soltani, Mohammad AzizMalayeri, Jafar Habibi, Mohammad Sabokrou, MohammadHossein Rohban CVPR, 2024 arXiv / code

This work addresses a practical challenge in image-based anomaly detection: the lack of a universally applicable method that can adapt to diverse datasets with distinct inductive biases. |

|

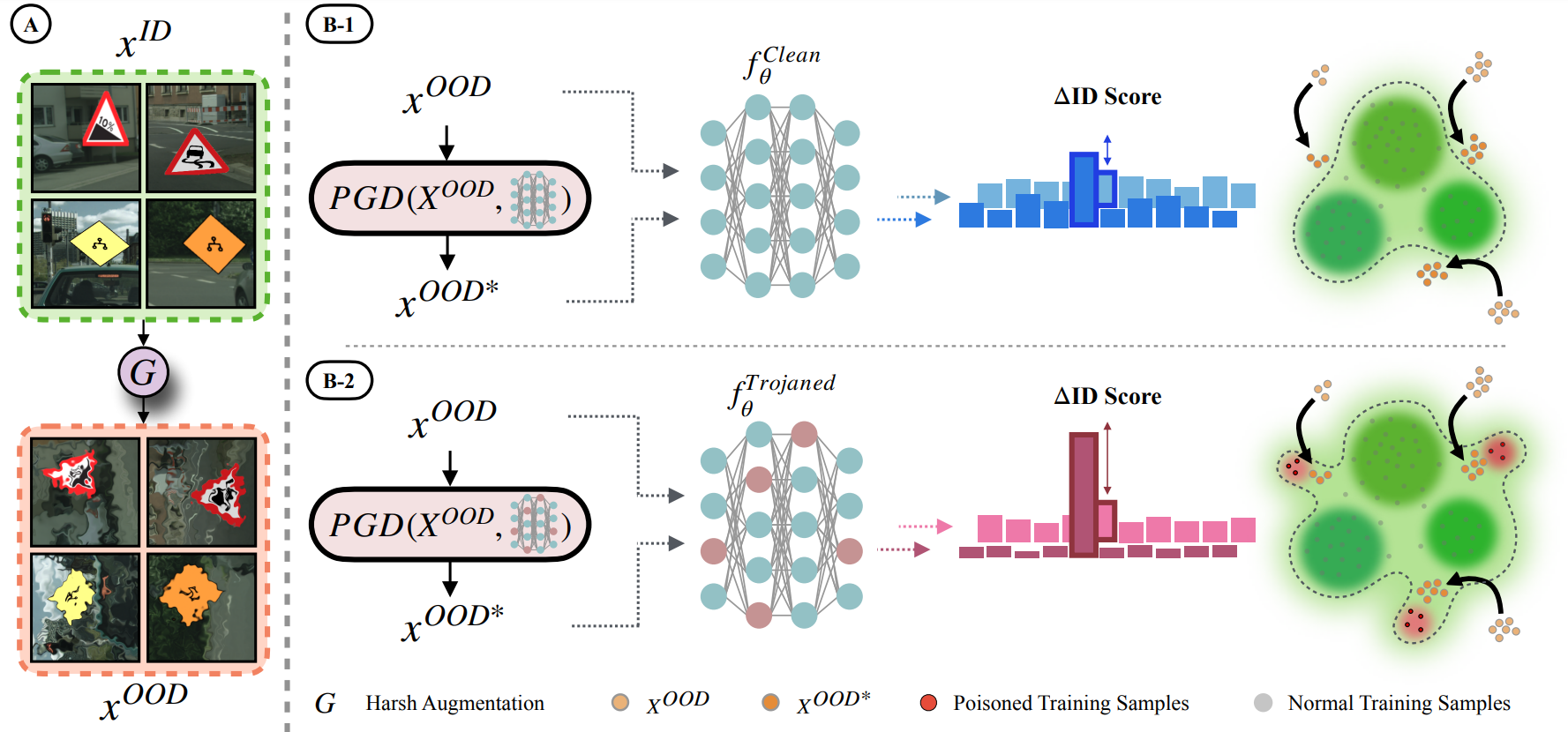

Hossein Mirzaei, Ali Ansari, Bahar Nia, Mojtaba Nafez, Moein Madadi, Sepehr Rezaee, Zeinab Taghavi, Arad Maleki, Kian Shamsaie, Hajialilue, Jafar Habibi, Mohammad Sabokrou, MohammadHossein Rohban NeurIPS, 2024 arXiv / code

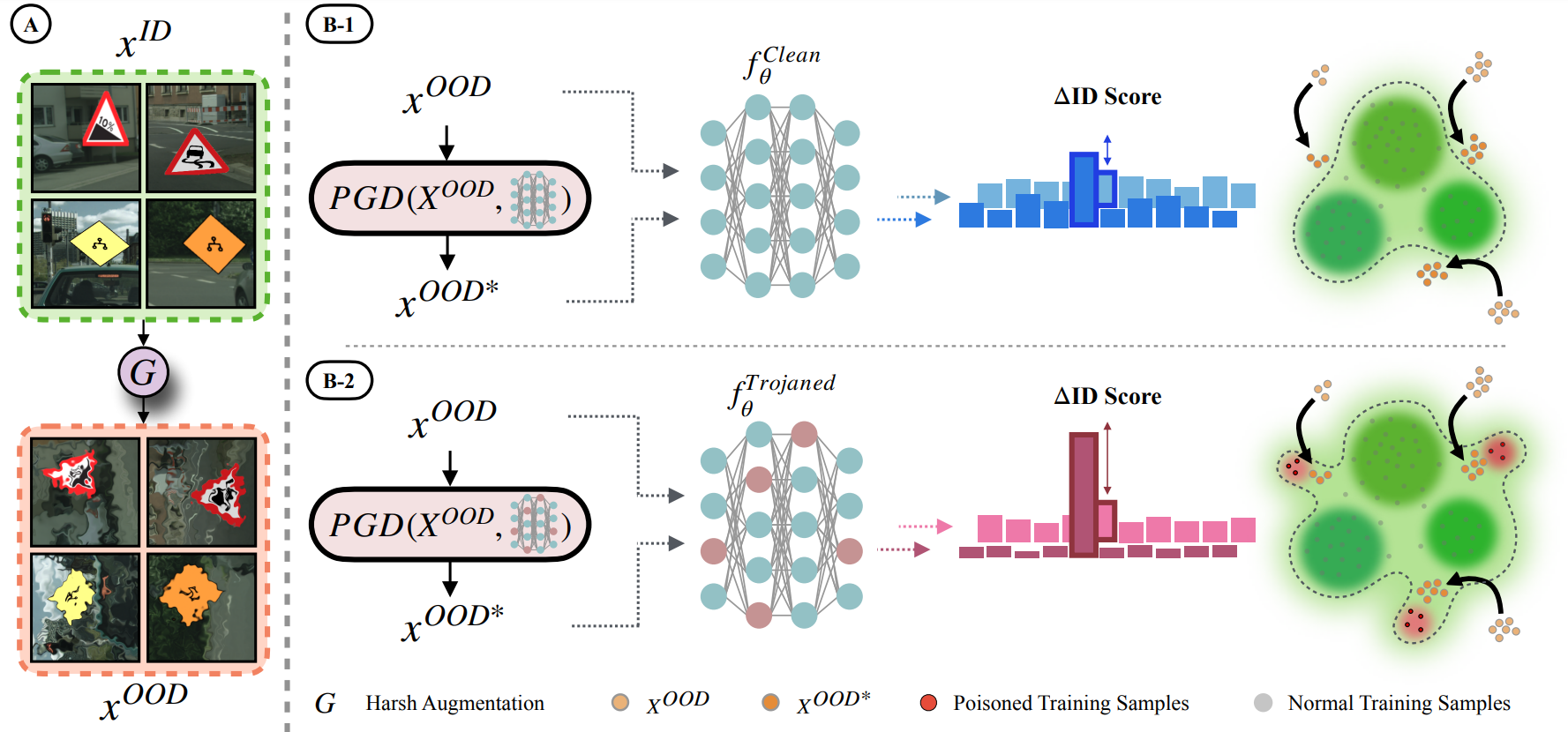

This work addresses the problem of determining whether a given model has been backdoored. The study finds that backdoored models exhibit jagged decision boundaries around out-of-distribution (OOD) samples, leading to reduced robustness. |

|

|

Design and source code from Jon Barron's website. |