|

I am a first-year Ph.D. student at École Polytechnique Fédérale de Lausanne (EPFL), Switzerland, and a Research Assistant in the Natural Language Understanding (NLU) group at the Idiap Research Institute. I received my M.Sc. degree in Computer Engineering from Sharif University of Technology, where I conducted research in the Robust and Interpretable Machine Learning (RIML) Lab under the supervision of Prof. MohammadHossein Rohban and collaborated closely with Dr. Mohammad Sabokrou. Previously, I received my Bachelor of Science in Computer Engineering from Iran University of Science and Technology. In my final year, I worked as a research assistant at CVLab IUST under the supervision of Prof. Mohammad Reza Mohammadi.

Email / Google Scholar / GitHub / LinkedIn |

|

I have extensive research experience in the trustworthiness and reliability of machine learning models, particularly in the domains of computer vision (image and video) and automatic speech recognition. I am now motivated to expand my work toward Large Language Models (LLMs). |

|

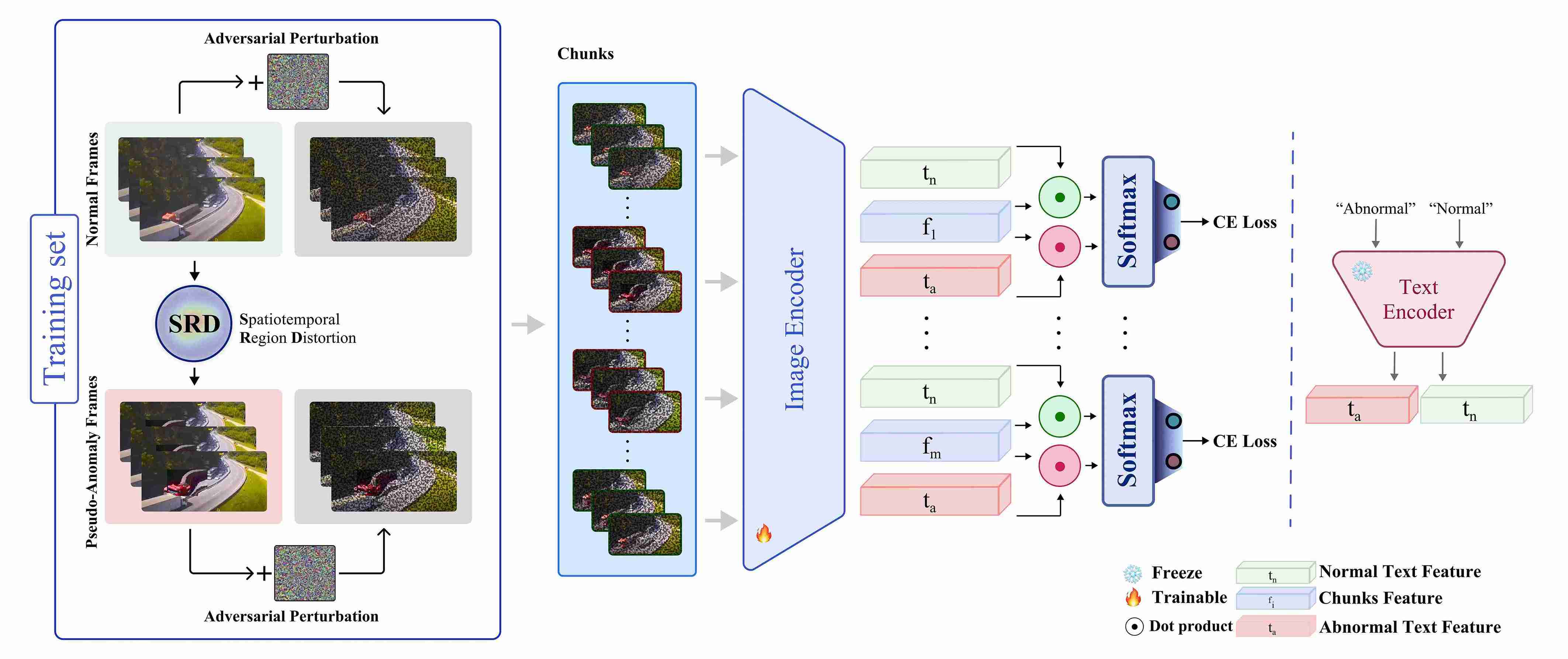

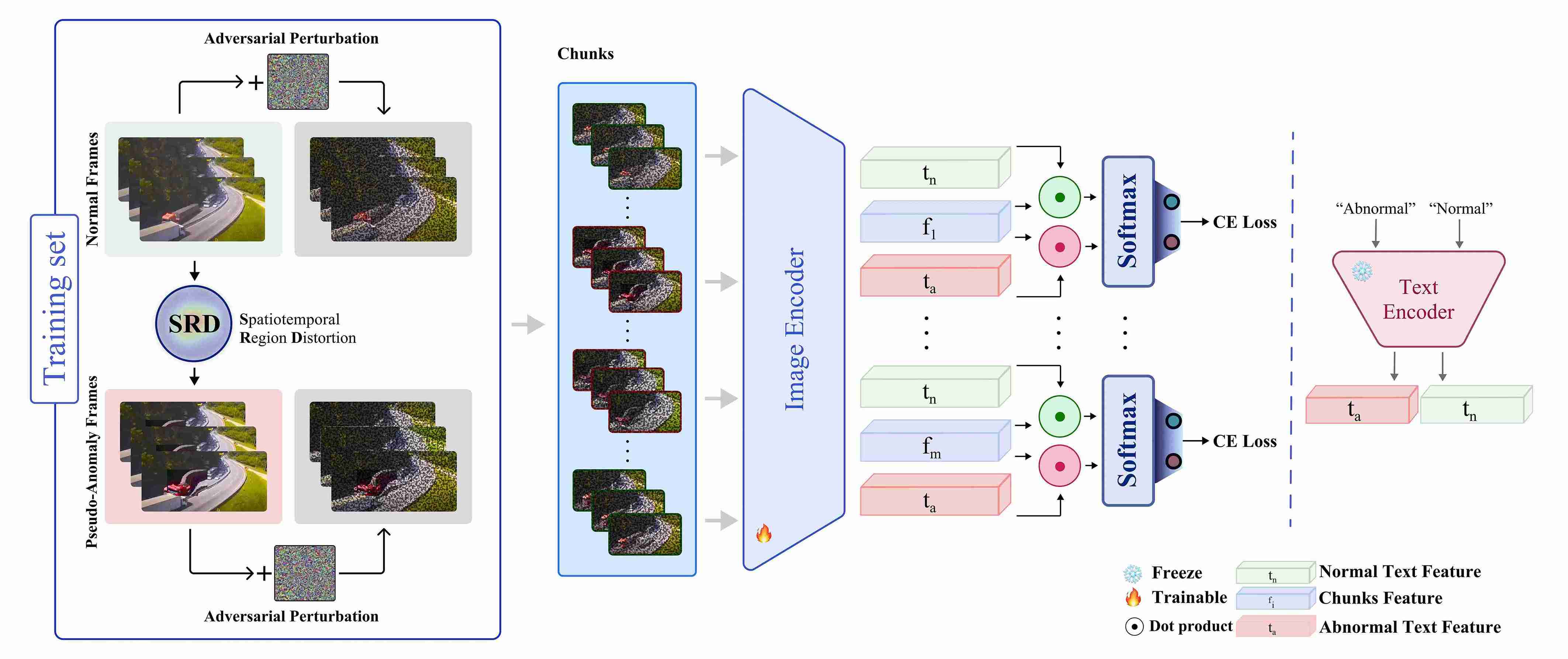

Mojtaba Nafez, Mobina Poulaei, Nikan Vasei, Bardia Soltani Moakhar, Mohammad Sabokrou, MohammadHossein Rohban

NeurIPS, 2025

arXiv / code

Weakly Supervised Video Anomaly Detection (WSVAD) models are vulnerable to adversarial attacks, and standard defenses fail under weak supervision. We propose SRD, a pseudo-anomaly generation method that creates temporally consistent synthetic anomalies to reduce pseudo-label noise and enable effective adversarial training. Our approach improves robustness, outperforming prior methods by 71.0% AUROC on average across benchmarks. |

|

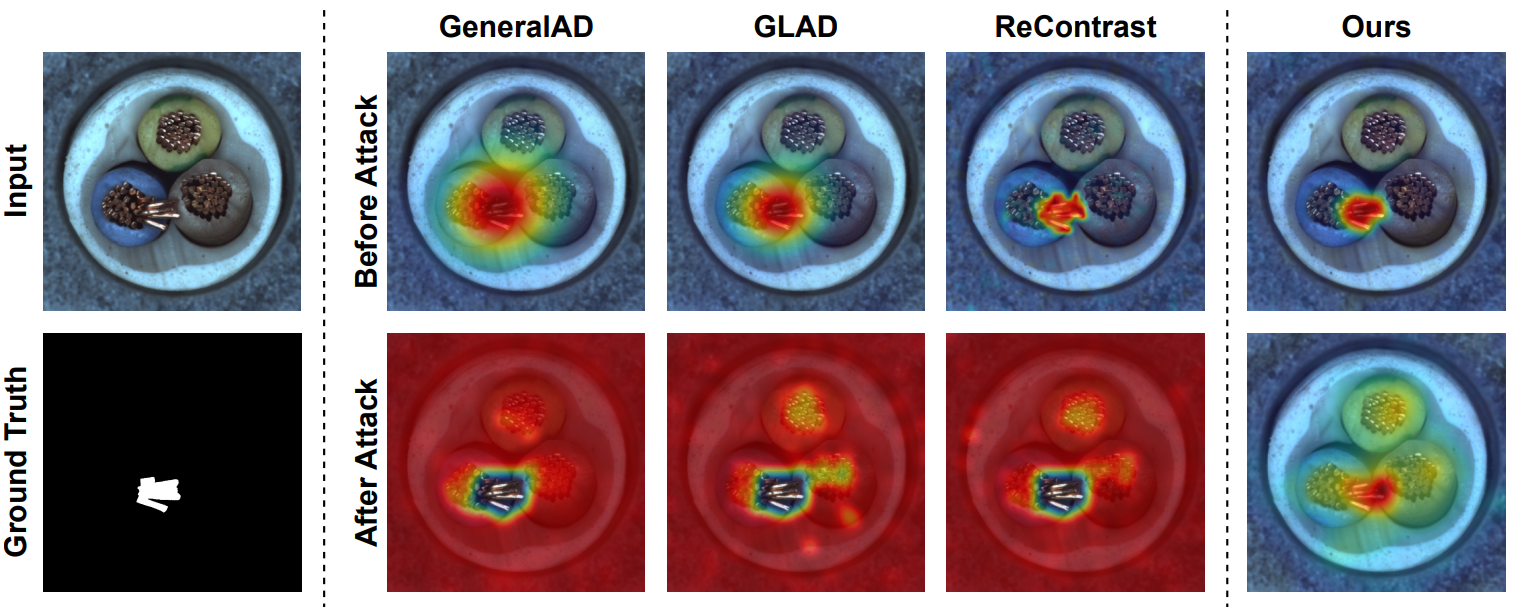

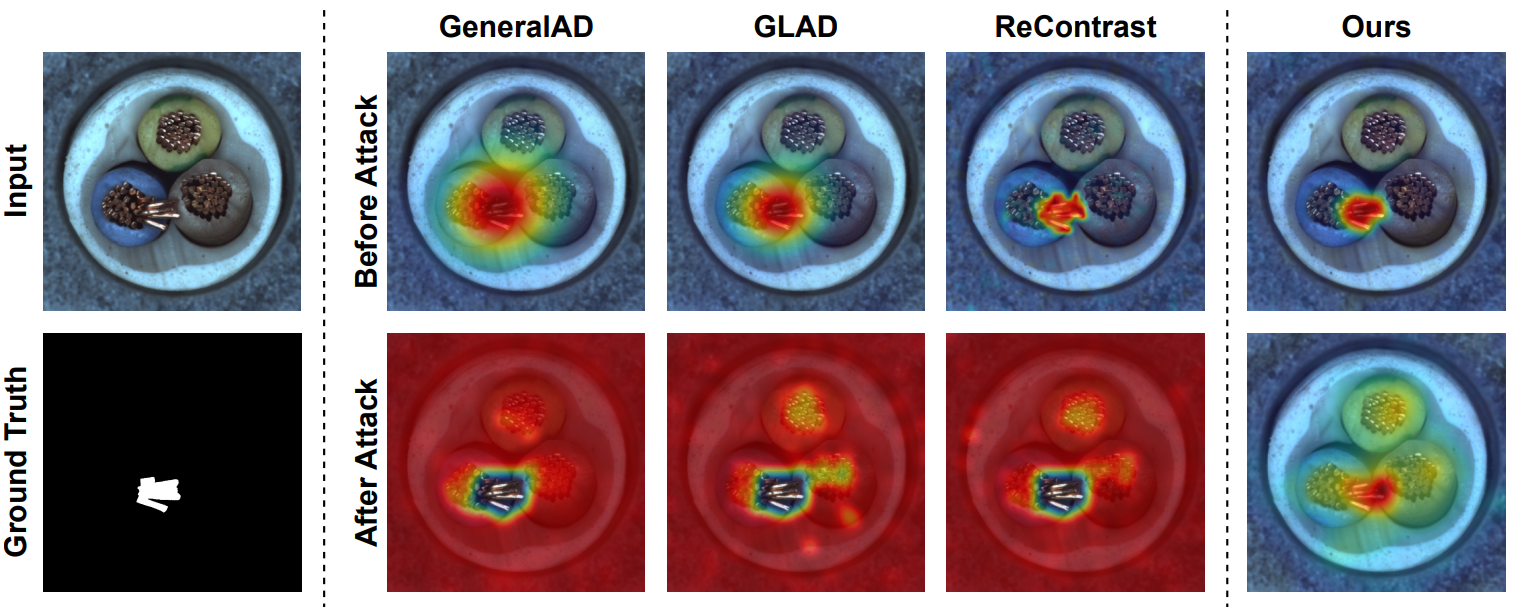

Mojtaba Nafez, Amirhossein Koochakian, Arad Maleki, MohammadHossein Rohban

CVPR, 2025

Proceedings / code

PatchGuard is an adversarially robust Anomaly Detection (AD) and Anomaly Localization (AL) method that uses pseudo-anomalies in a ViT-based framework. It leverages Foreground-Aware Pseudo-Anomalies and a novel loss function for improved robustness, achieving significant performance gains on industrial and medical datasets under adversarial settings. |

|

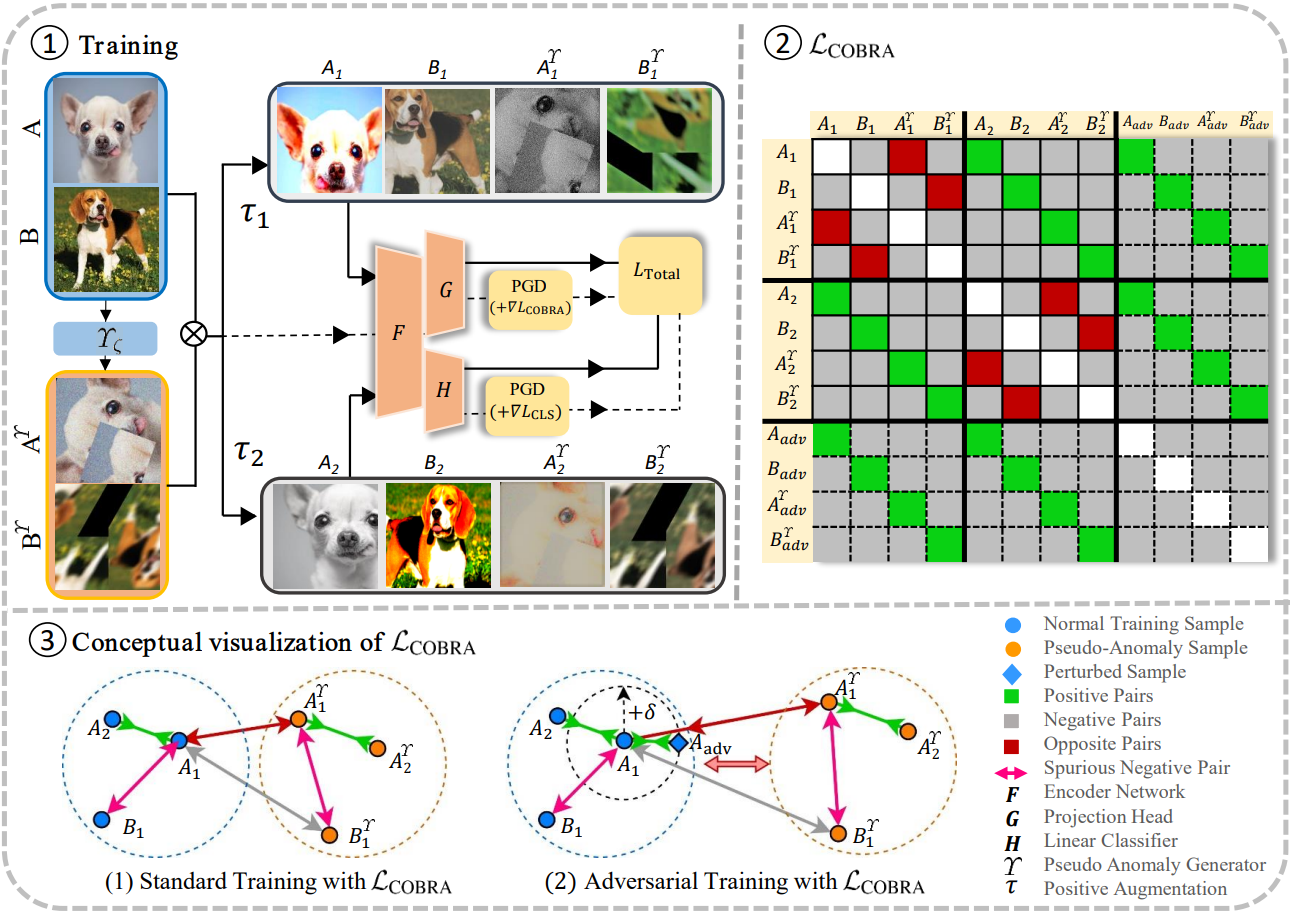

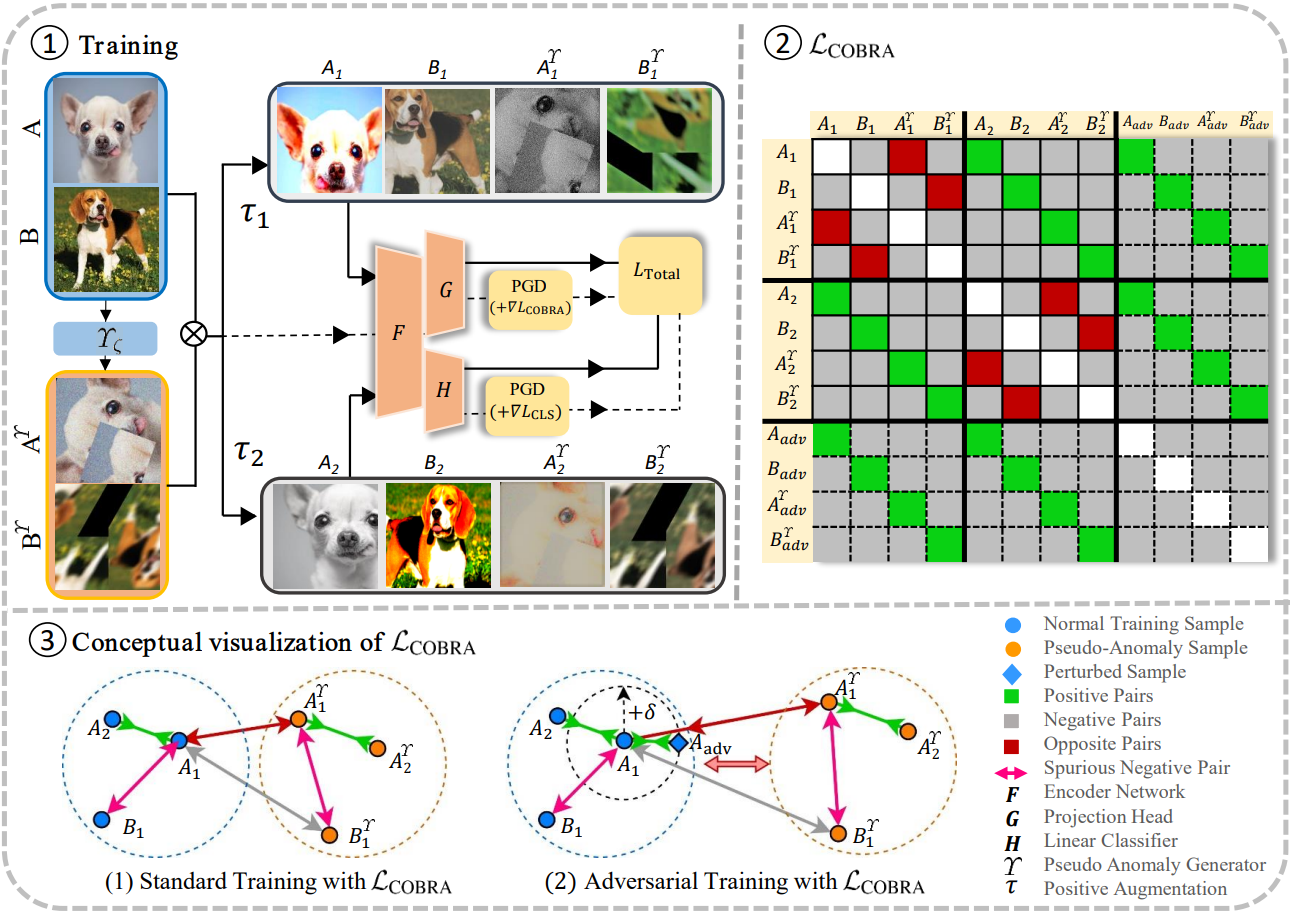

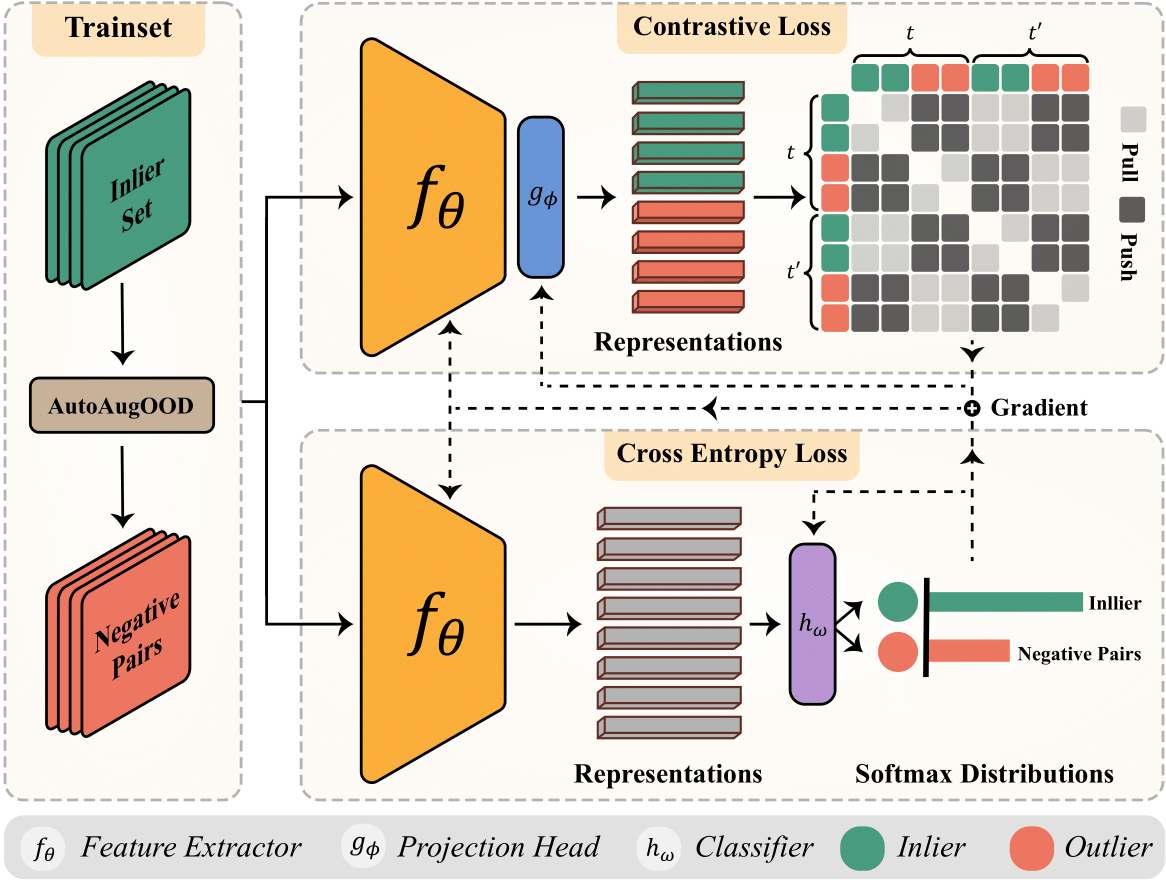

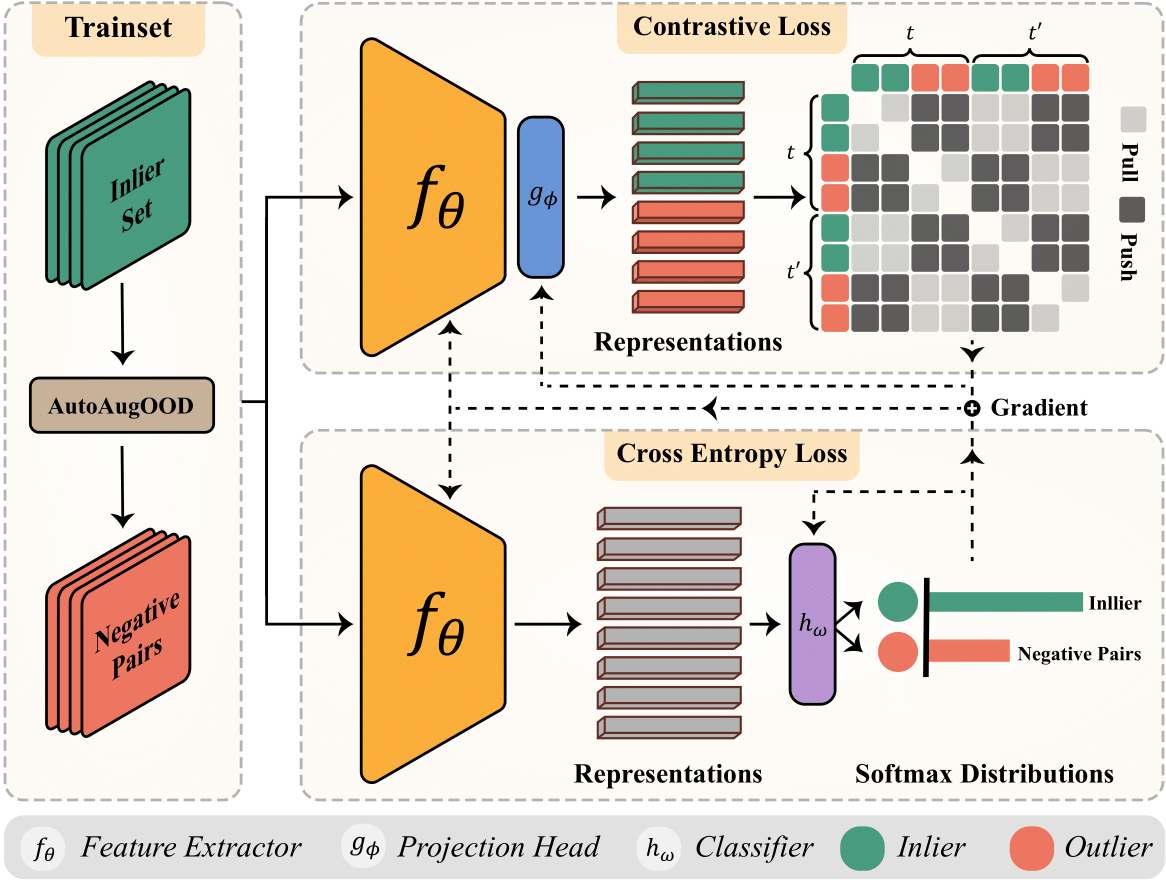

Hossein Mirzaei, Mojtaba Nafez, Jafar Habibi, Mohammad Sabokrou, MohammadHossein Rohban

ICLR, 2025

OpenReview / code

Anomaly Detection (AD) methods are vulnerable to adversarial attacks because they rely on unlabeled normal samples. We address this by creating a pseudo-anomaly group and applying adversarial training with a contrastive loss, using opposite pairs to mitigate spurious negative pairs and improve robustness. |

|

Hossein Mirzaei, Mojtaba Nafez, Mohammad Jafari, Mohammad Bagher Soltani, Mohammad AzizMalayeri, Jafar Habibi, Mohammad Sabokrou, MohammadHossein Rohban

CVPR, 2024

arXiv / code

Image-based anomaly detection lacks a universally applicable method that can adapt to diverse datasets with distinct inductive biases. Our research addresses this practical challenge. |

|

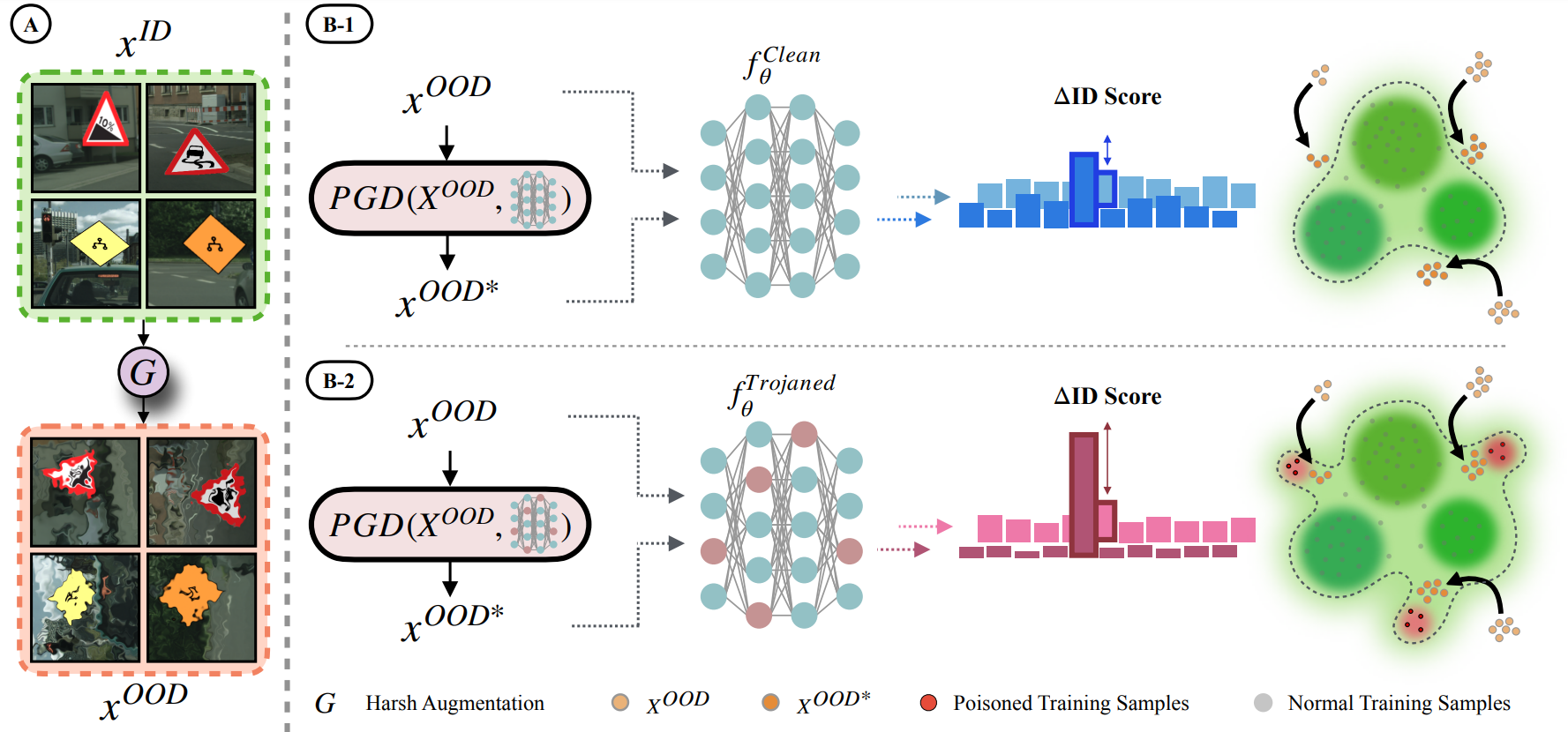

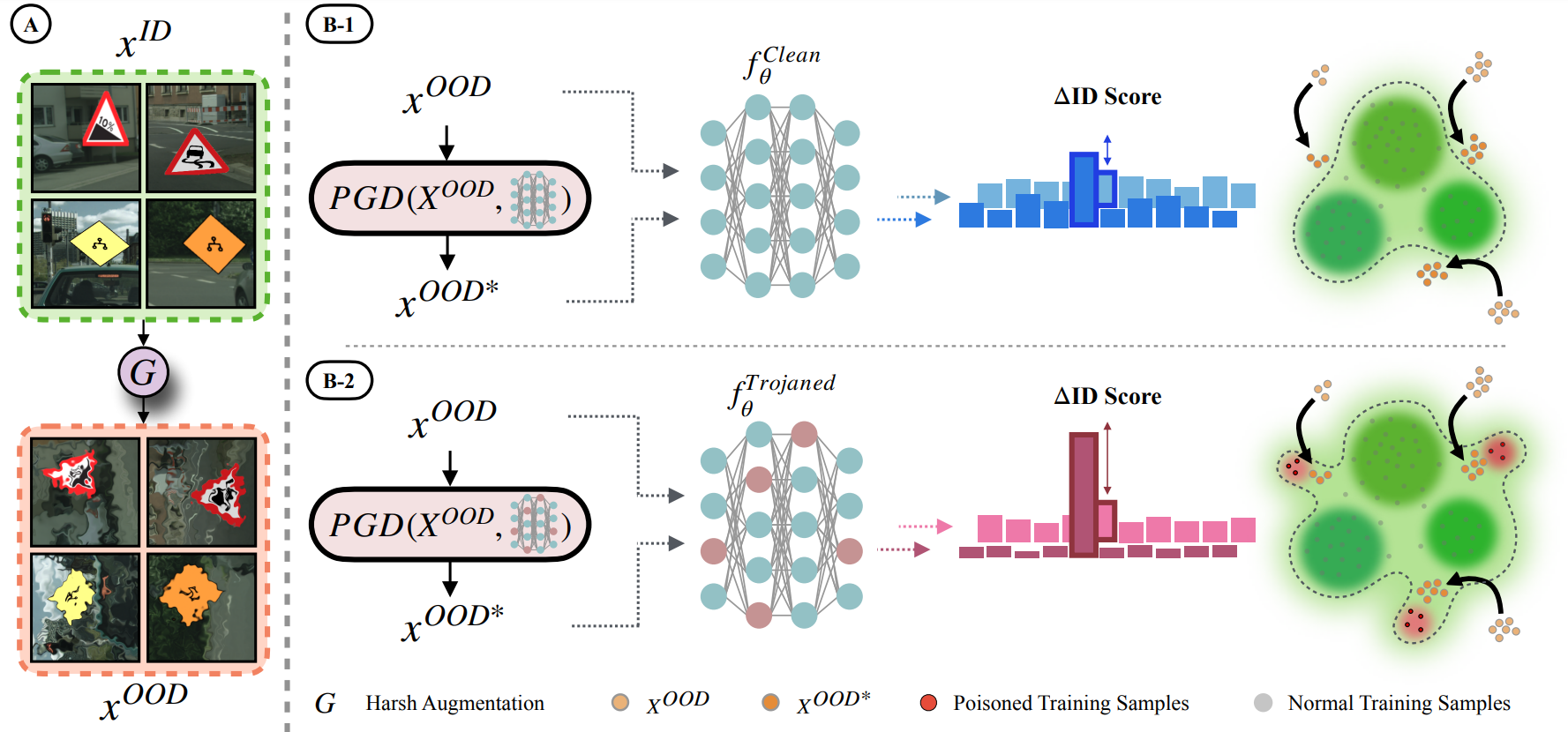

Hossein Mirzaei, Ali Ansari, Bahar Nia, Mojtaba Nafez, Moein Madadi, Sepehr Rezaee, Zeinab Taghavi, Arad Maleki, Kian Shamsaie, Hajialilue, Jafar Habibi, Mohammad Sabokrou, Mohammad Hossein Rohban

NeurIPS, 2024

arXiv / code

This work addresses the problem of identifying whether a given model has been backdoored. We find that backdoored models exhibit jagged decision boundaries around out-of-distribution (OOD) samples, leading to reduced robustness. |

|

Design and source code from Jon Barron's website. |